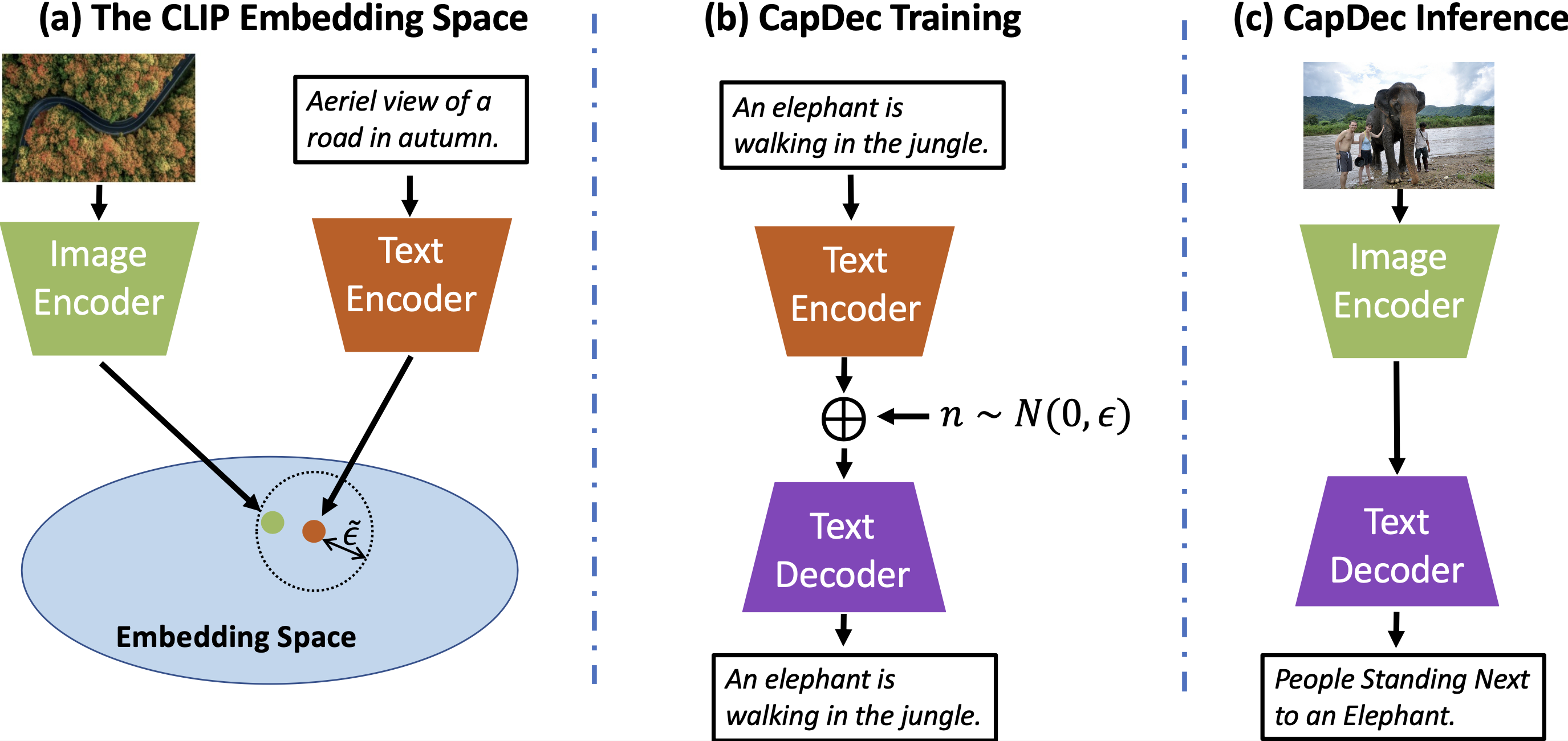

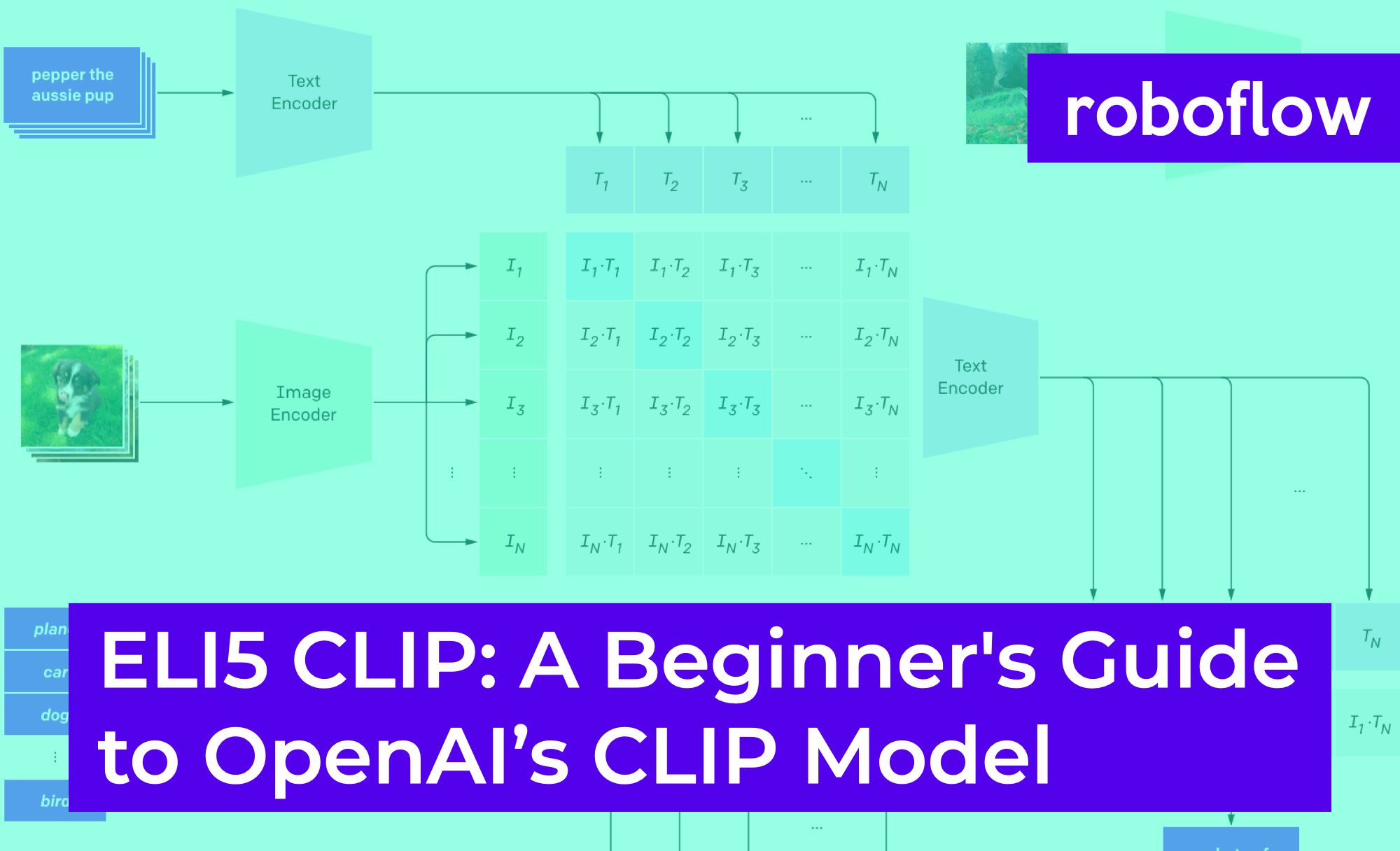

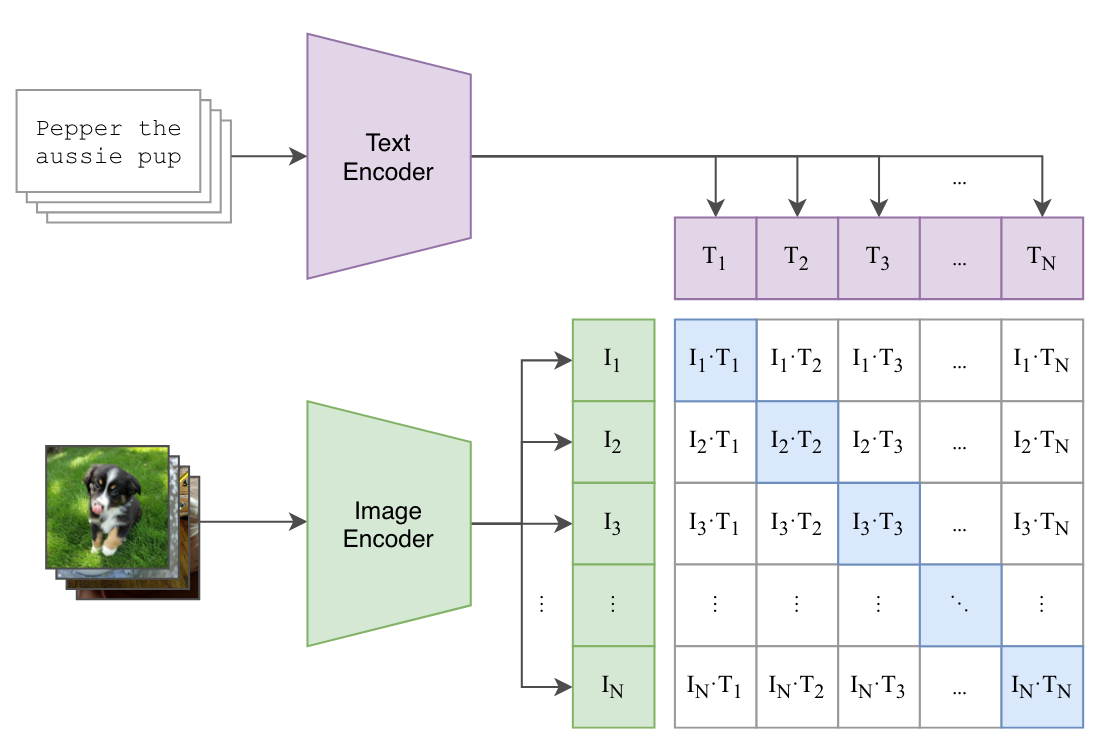

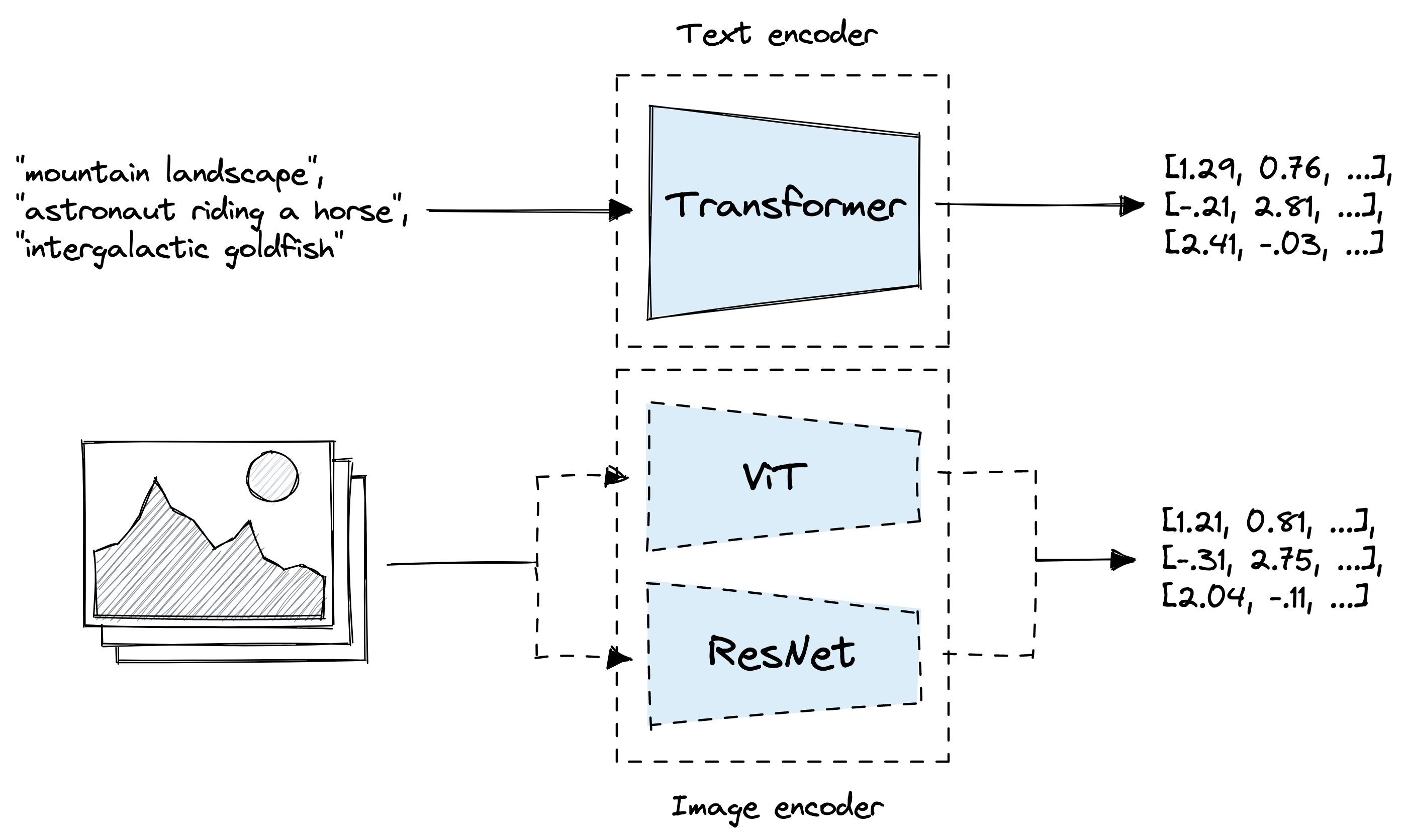

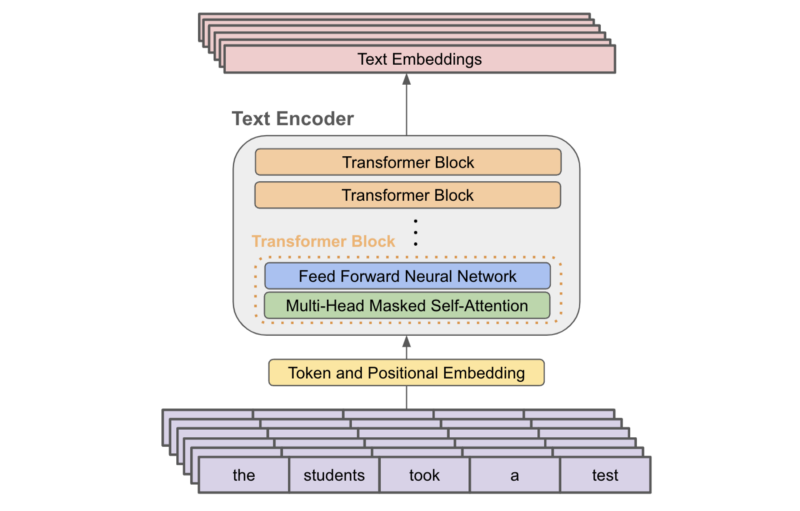

Example showing how the CLIP text encoder and image encoders are used...

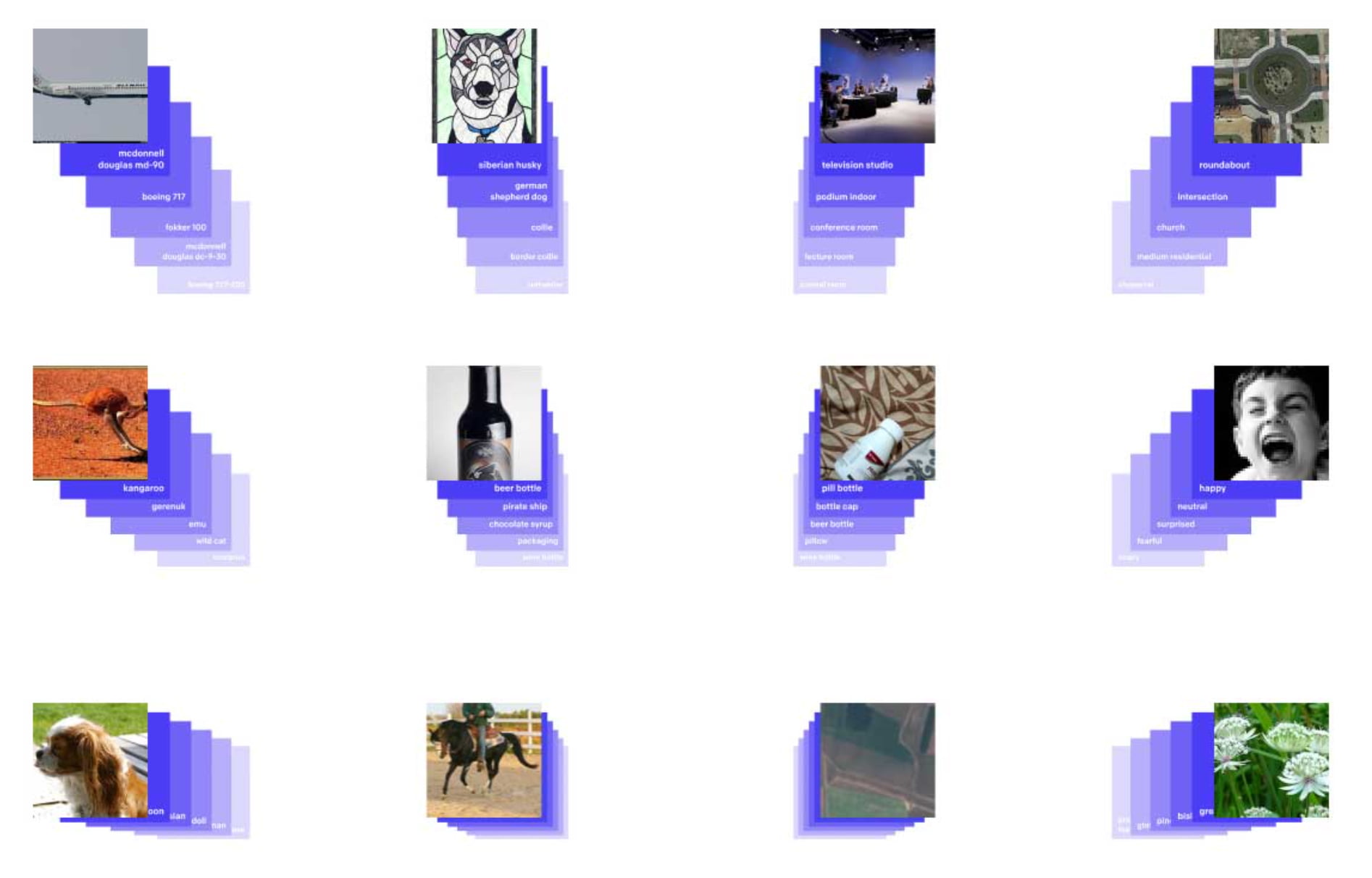

Process diagram of the CLIP model for our task. This figure is created...

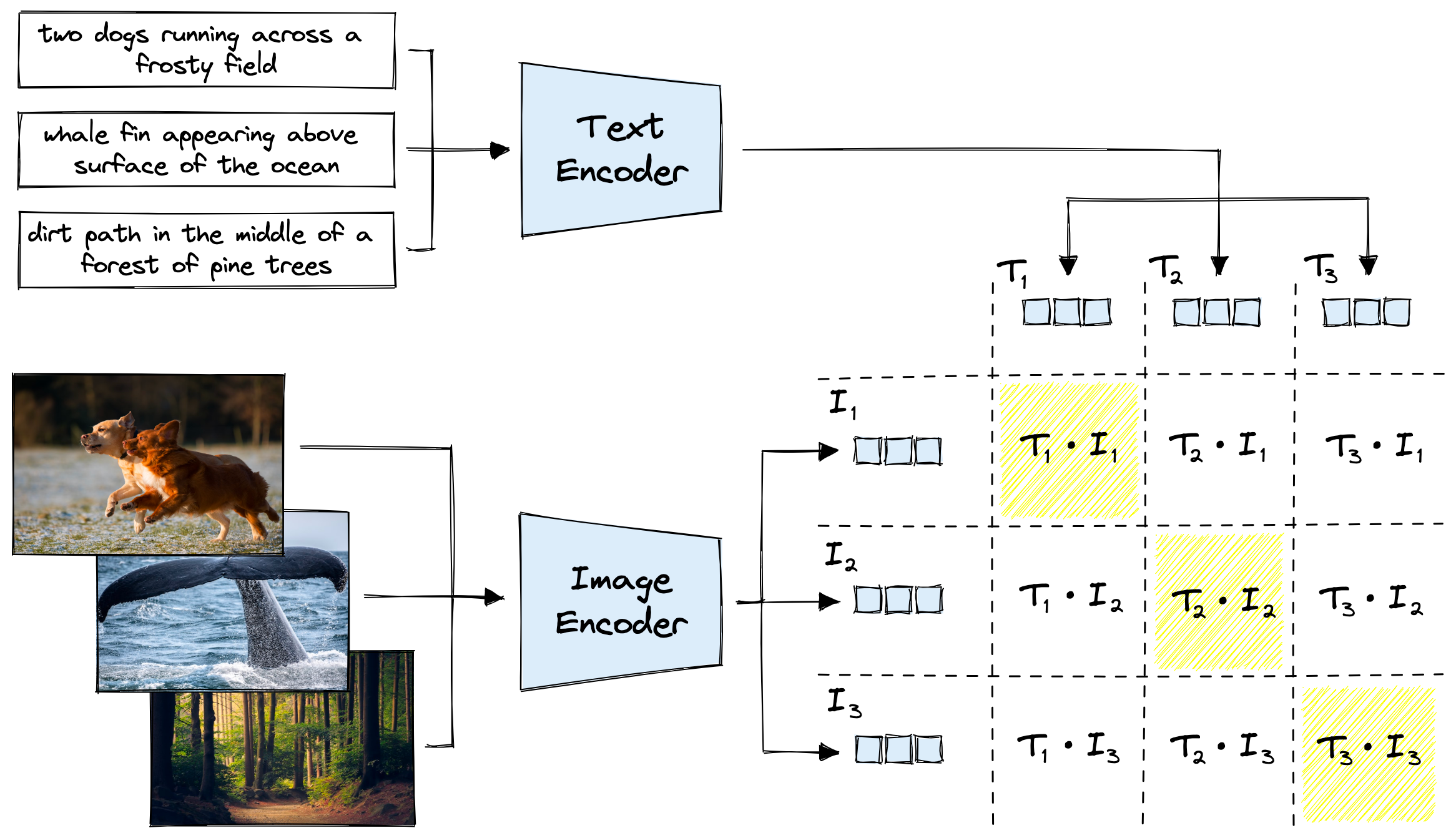

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

MaMMUT: A simple vision-encoder text-decoder architecture for multimodal tasks – Google Research Blog

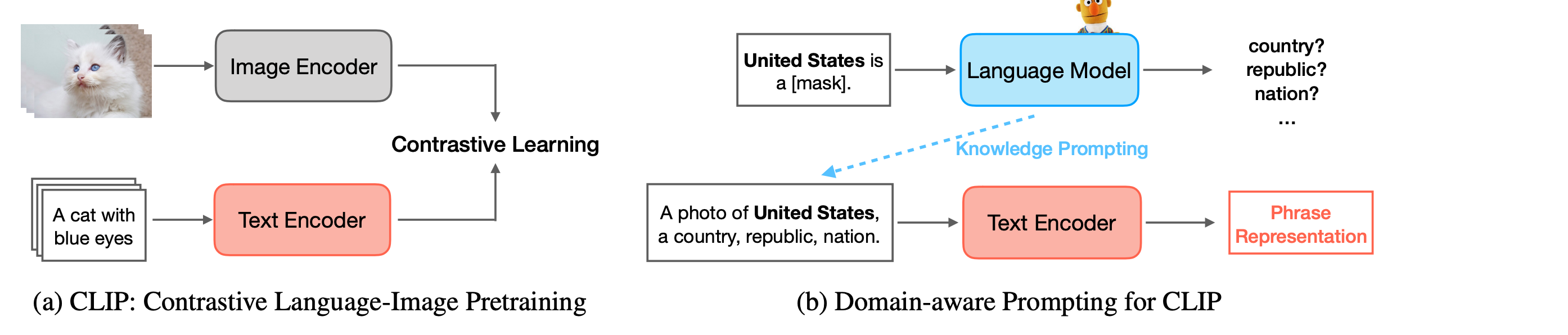

Overview of VT-CLIP where text encoder and visual encoder refers to the...

Romain Beaumont on X: "@AccountForAI and I trained a better multilingual encoder aligned with openai clip vit-l/14 image encoder. https://t.co/xTgpUUWG9Z 1/6 https://t.co/ag1SfCeJJj"