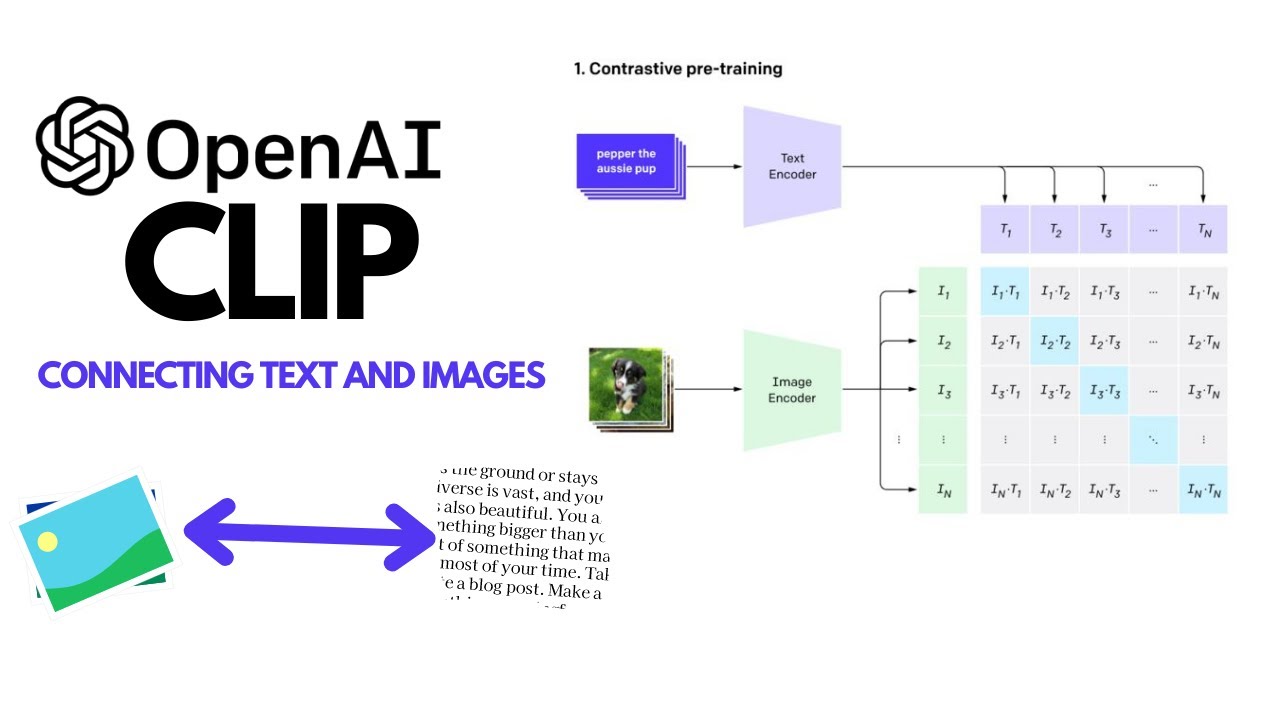

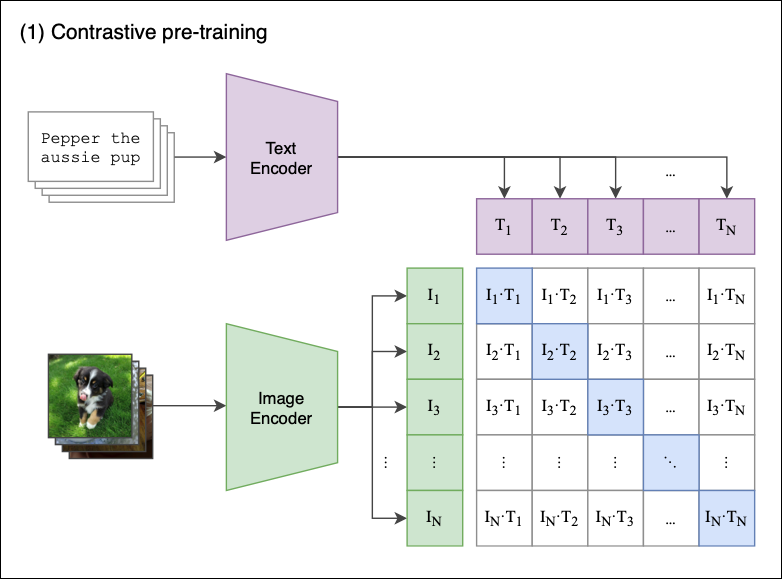

Contrastive Language–Image Pre-training (CLIP)-Connecting Text to Image | by Sthanikam Santhosh | Medium

openai's CLIP model not working with pytorch 1.12 in some environments · Issue #17974 · huggingface/transformers · GitHub

![[P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search](https://external-preview.redd.it/W9YcFgBnfZDMlabAtrfk4CNq8IjFz7gmrlOz2NkSIKs.png?format=pjpg&auto=webp&s=7617eef5cbad7a9c0399650933d416ae43c14740)

[P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search

GitHub - huggingface/pytorch-image-models: PyTorch image models, scripts, pretrained weights -- ResNet, ResNeXT, EfficientNet, NFNet, Vision Transformer (ViT), MobileNet-V3/V2, RegNet, DPN, CSPNet, Swin Transformer, MaxViT, CoAtNet, ConvNeXt, and more

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube

Zero-shot Image Classification with OpenAI CLIP and OpenVINO™ — OpenVINO™ documentation

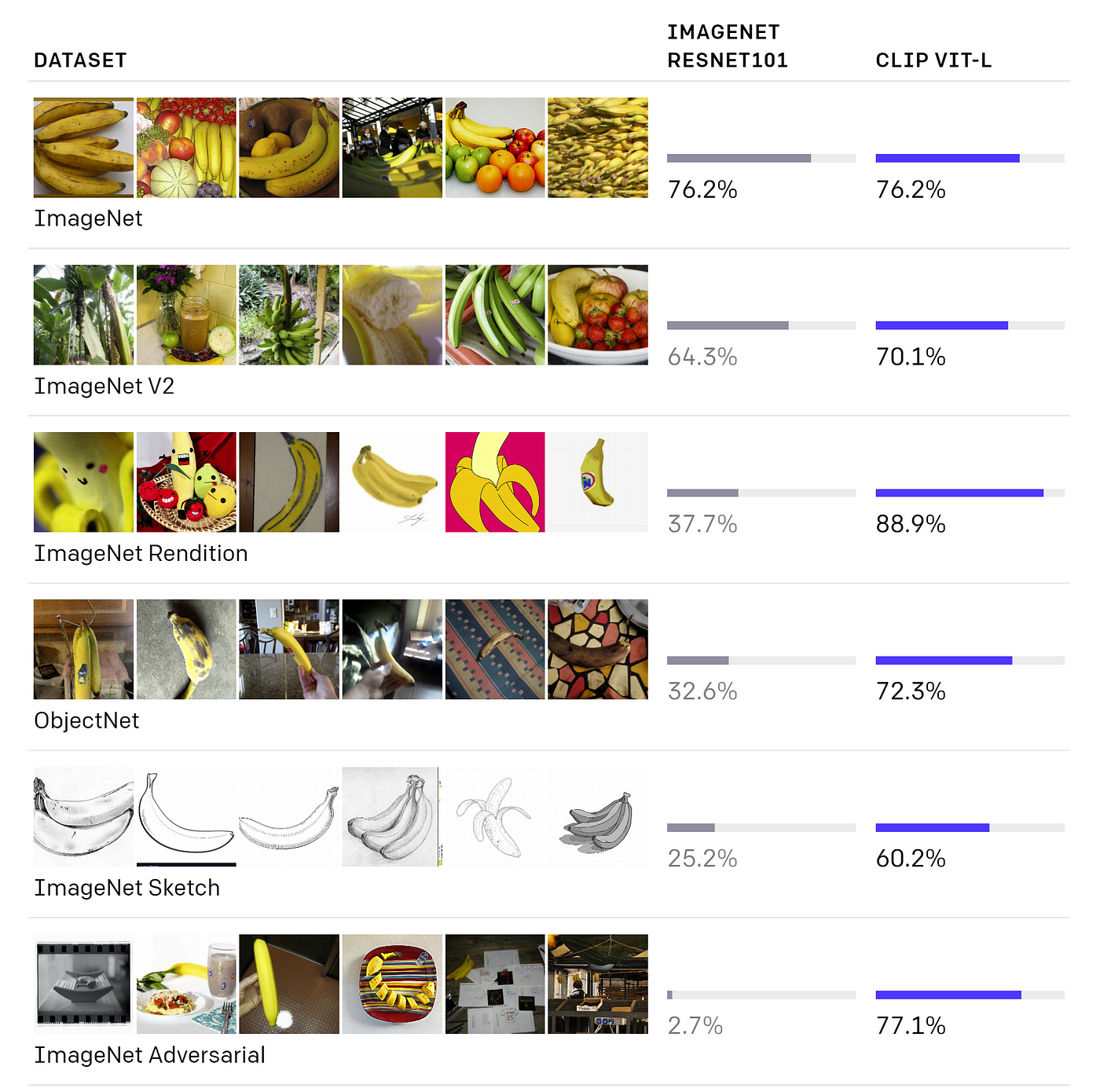

Using CLIP to Classify Images without any Labels | by Cameron R. Wolfe, Ph.D. | Towards Data Science

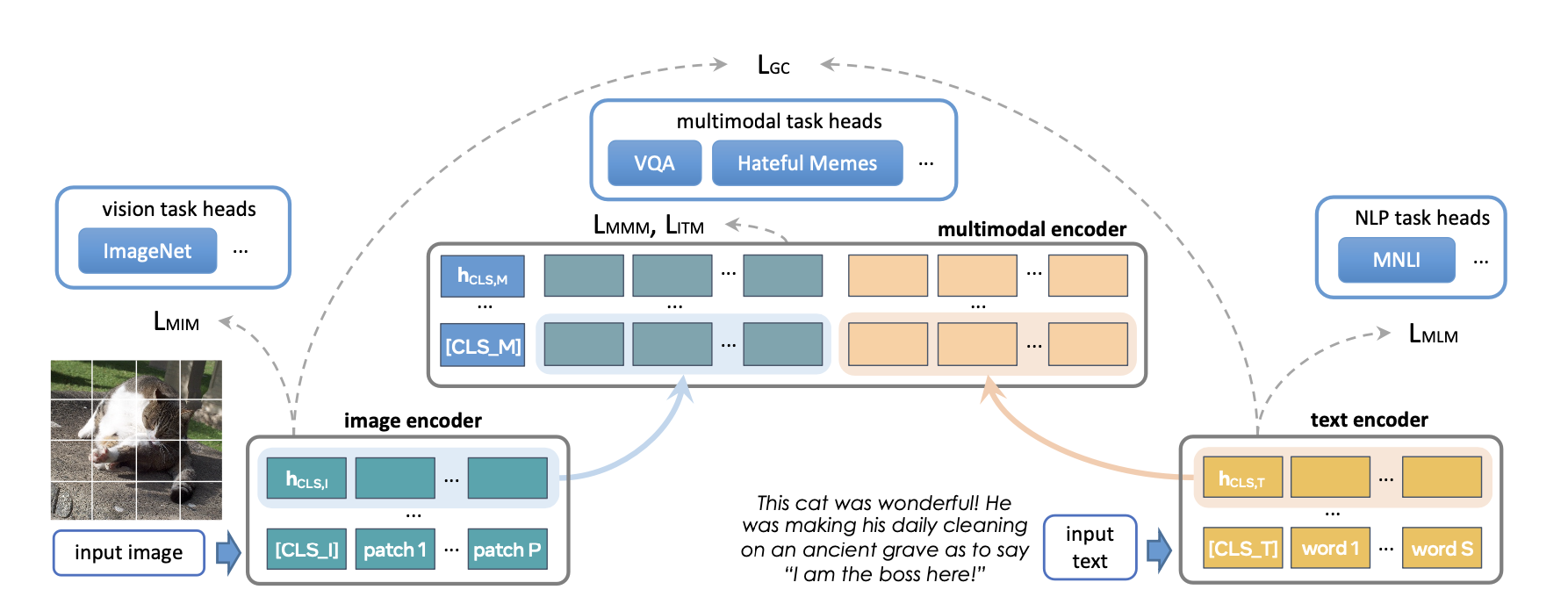

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science