Meet 'Chinese CLIP,' An Implementation of CLIP Pretrained on Large-Scale Chinese Datasets with Contrastive Learning - MarkTechPost

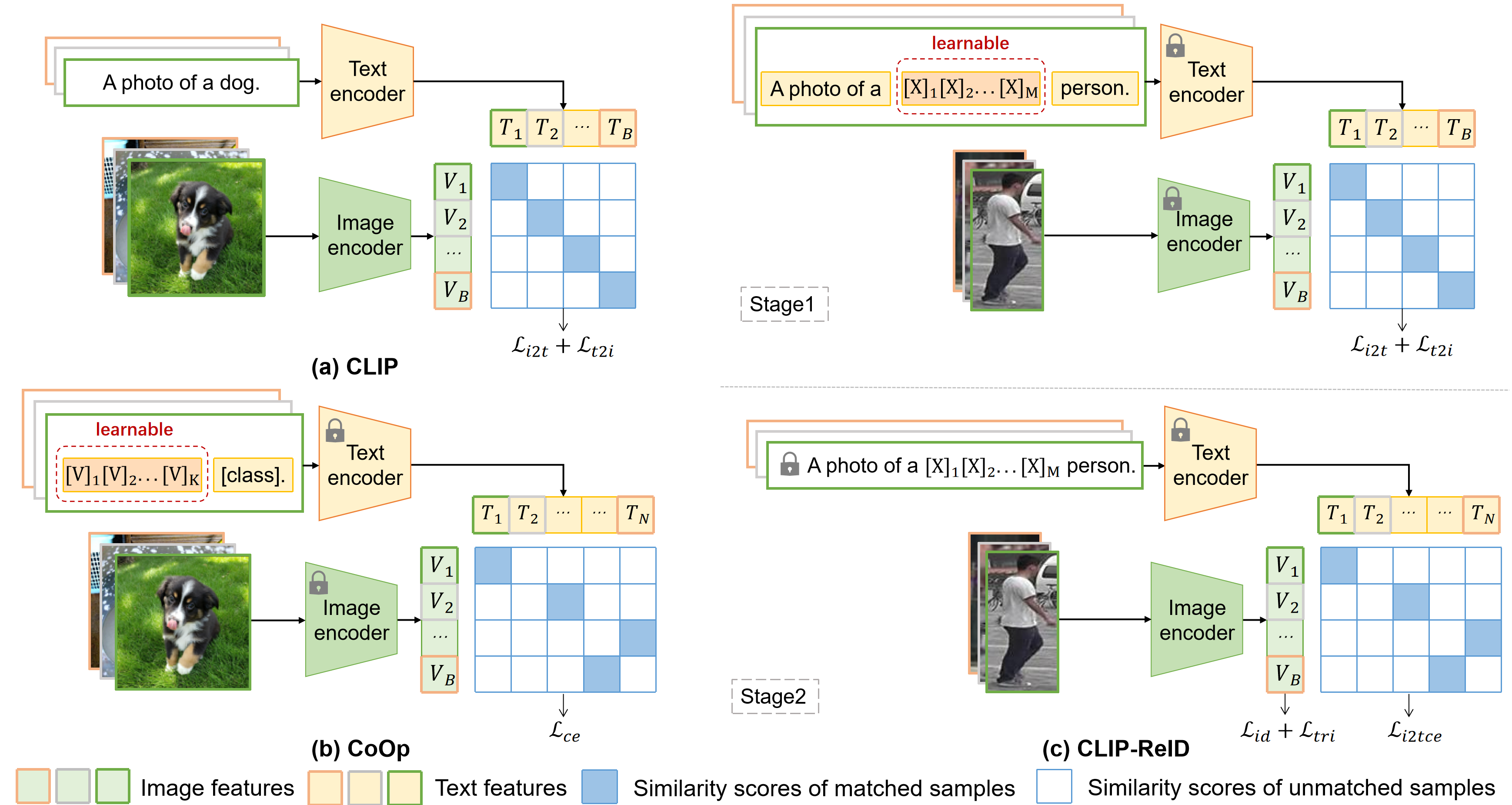

CLIP-ReID: Exploiting Vision-Language Model for Image Re-Identification without Concrete Text Labels | Papers With Code

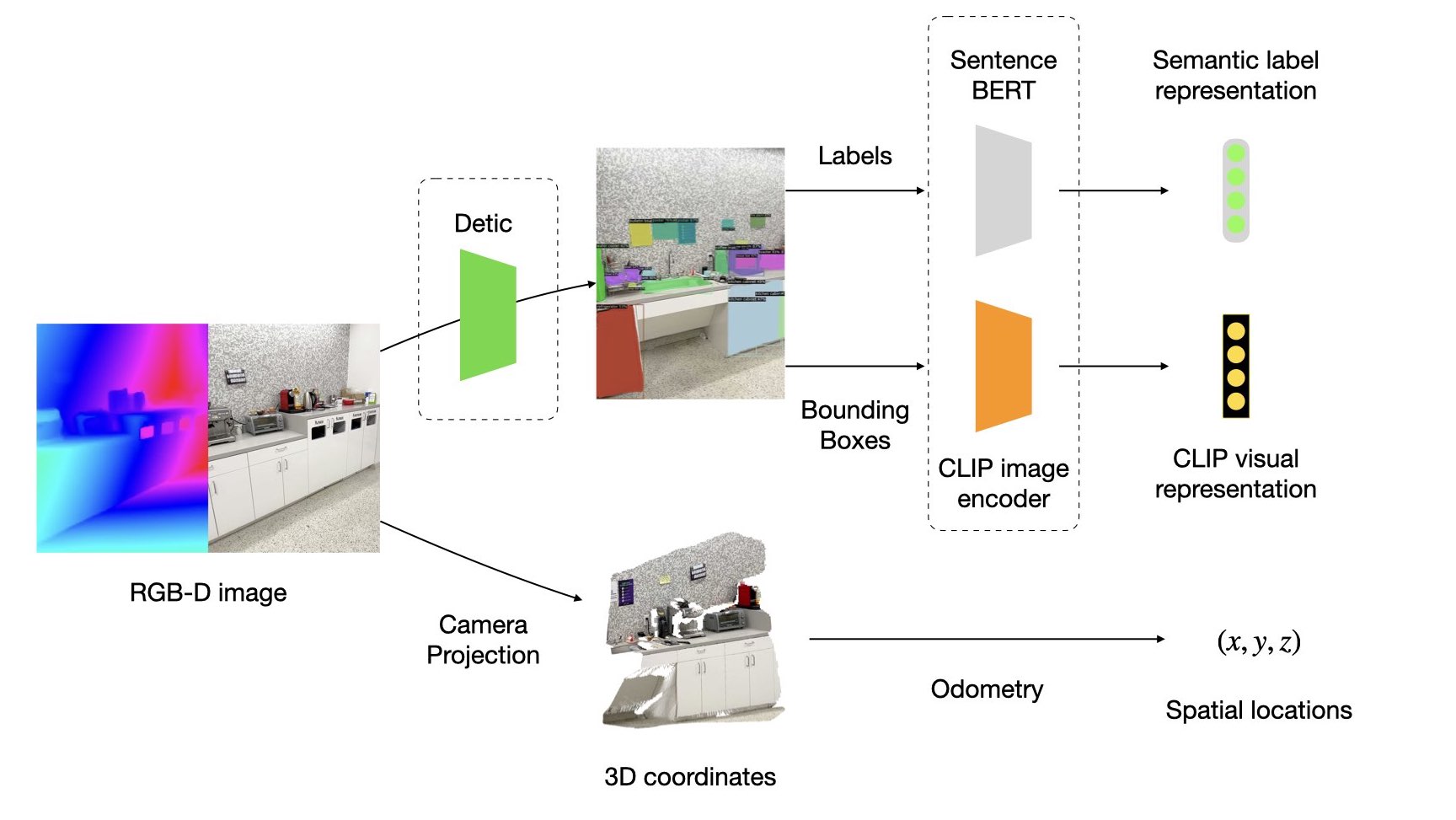

Overview of our method. The image is encoded into a feature map by the...

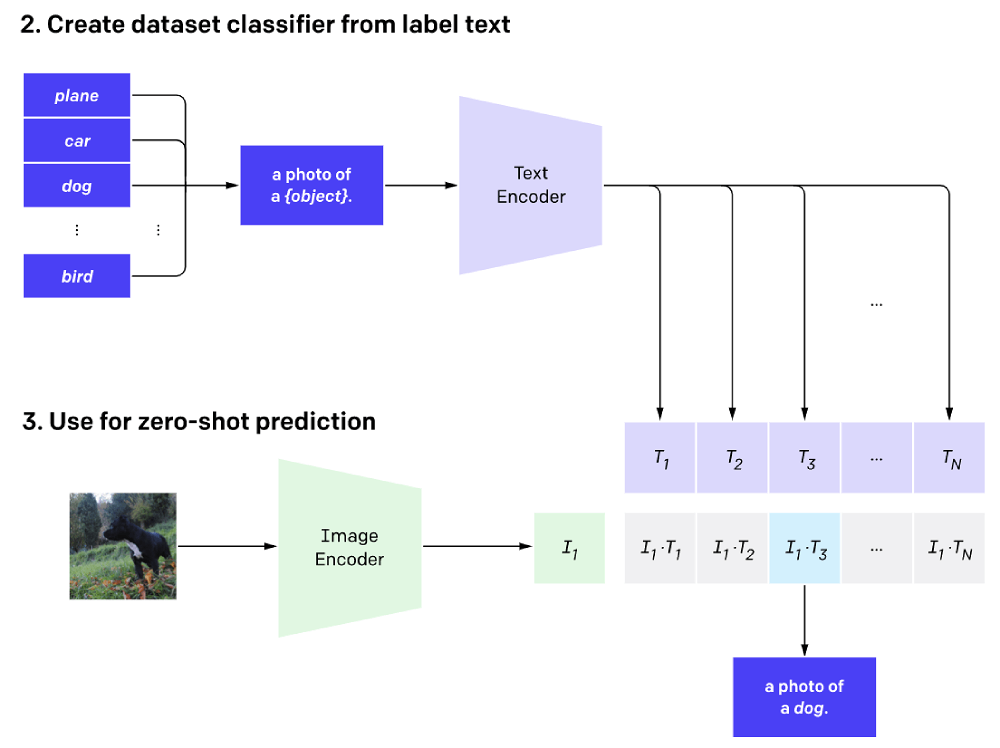

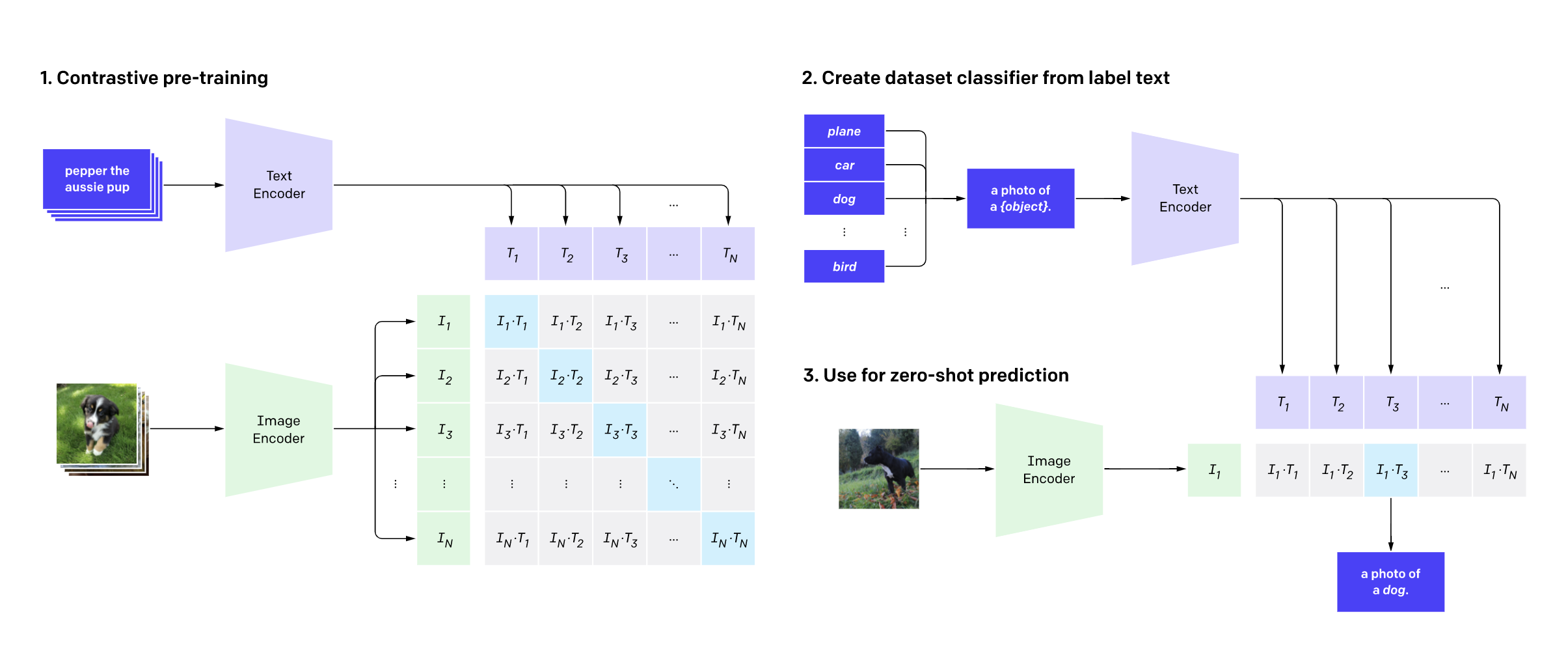

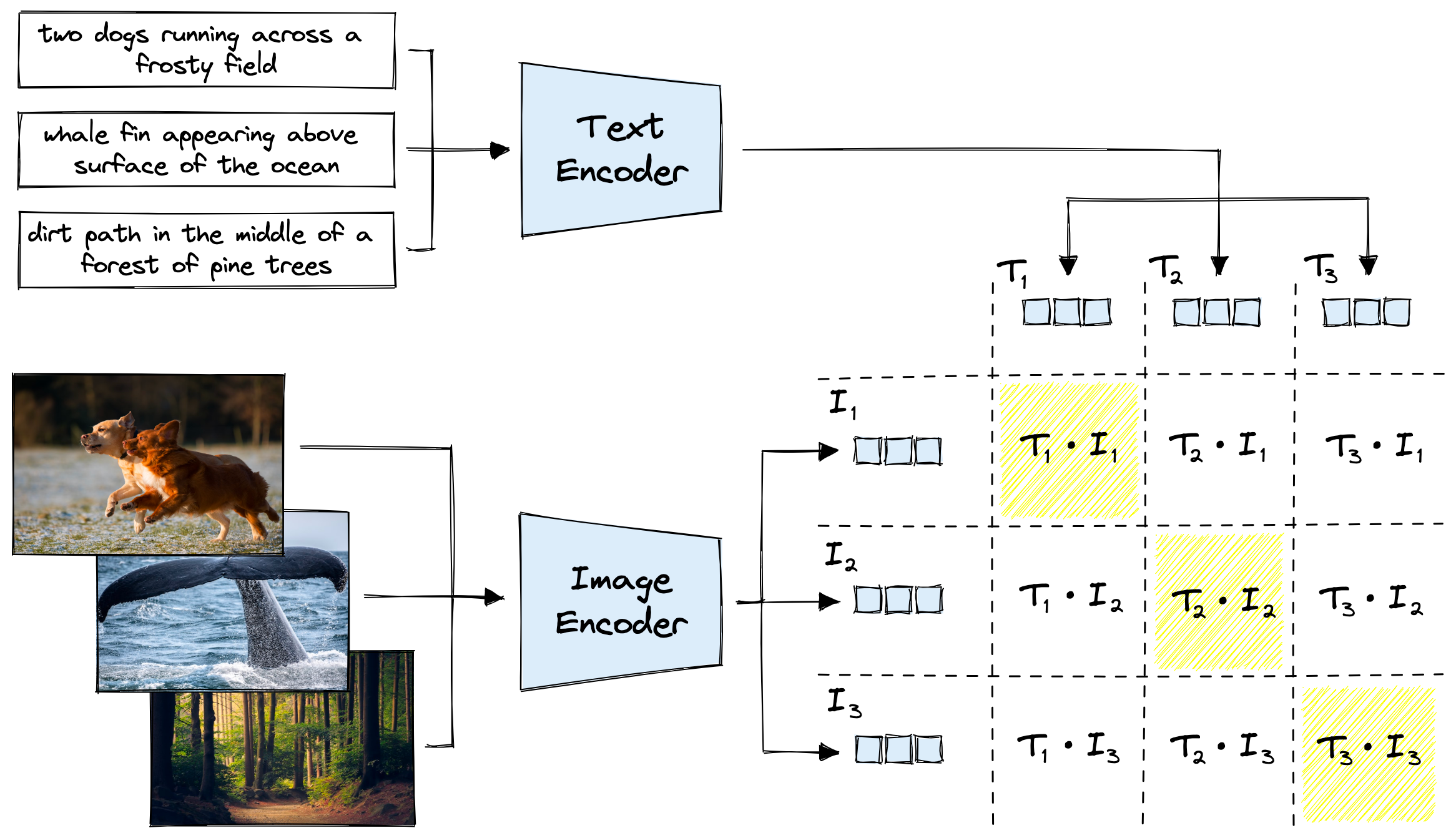

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

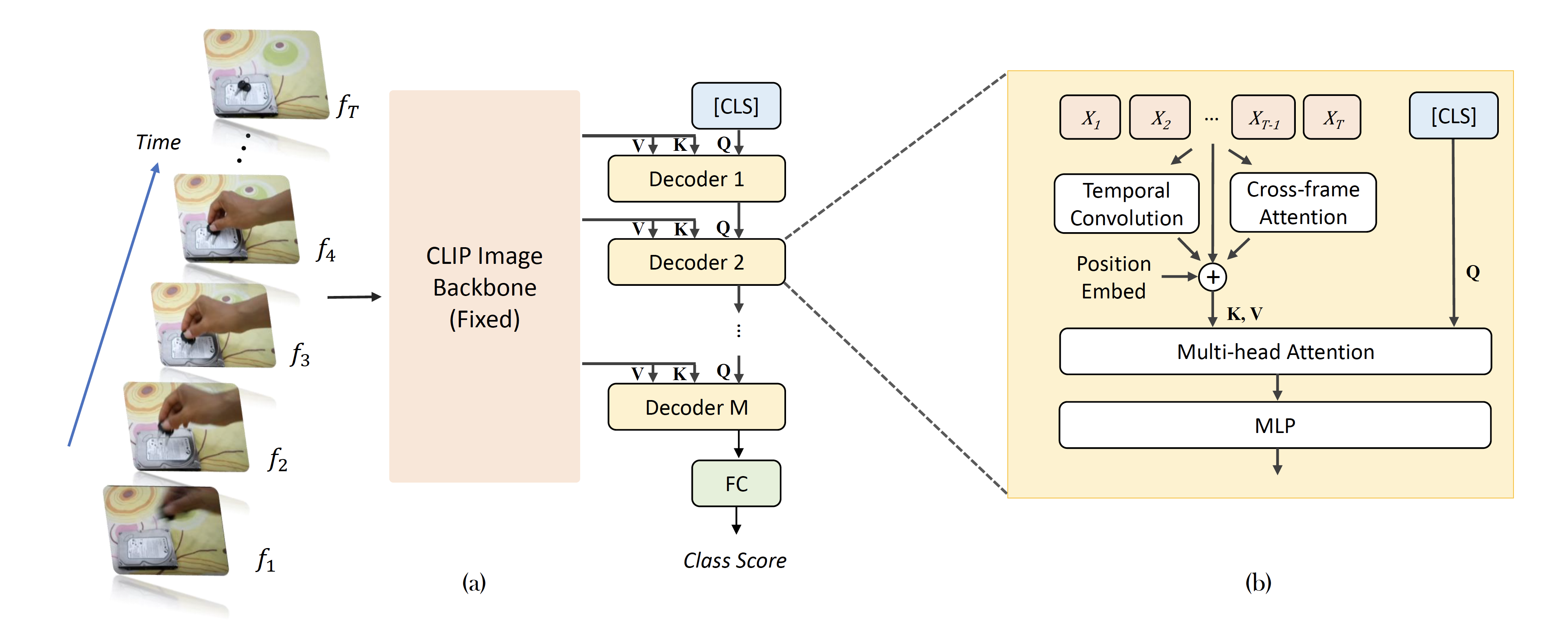

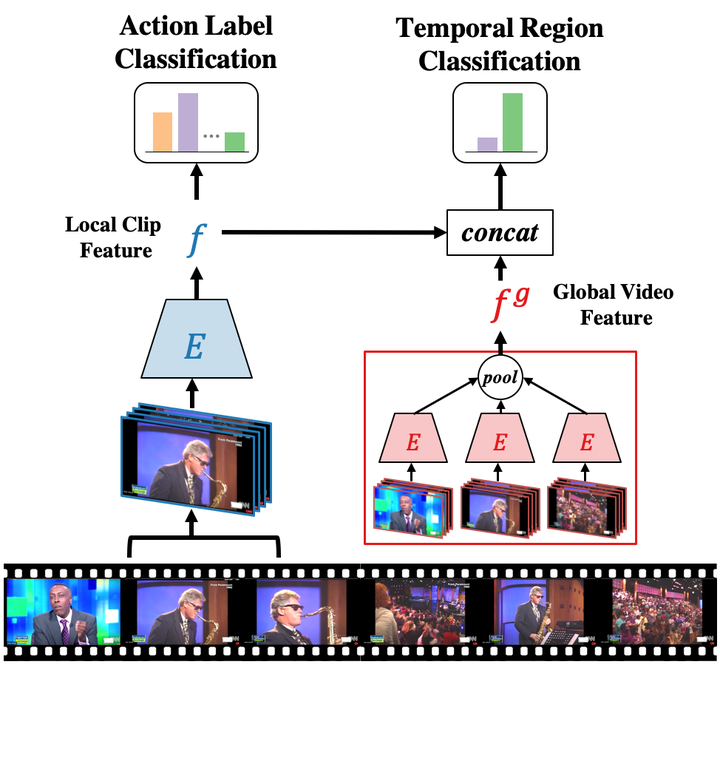

Overview of VT-CLIP, where text encoder and visual encoder refer to the...

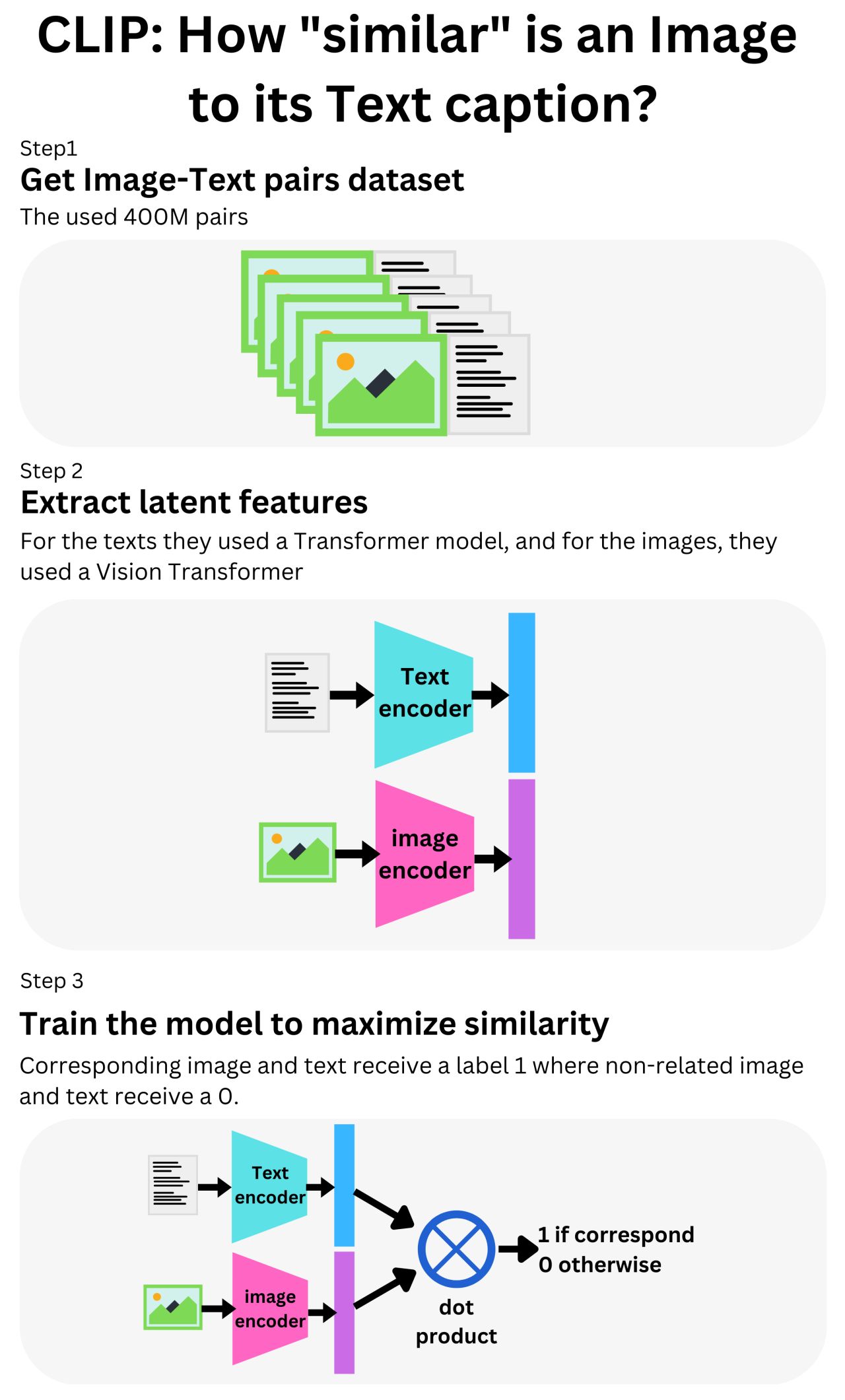

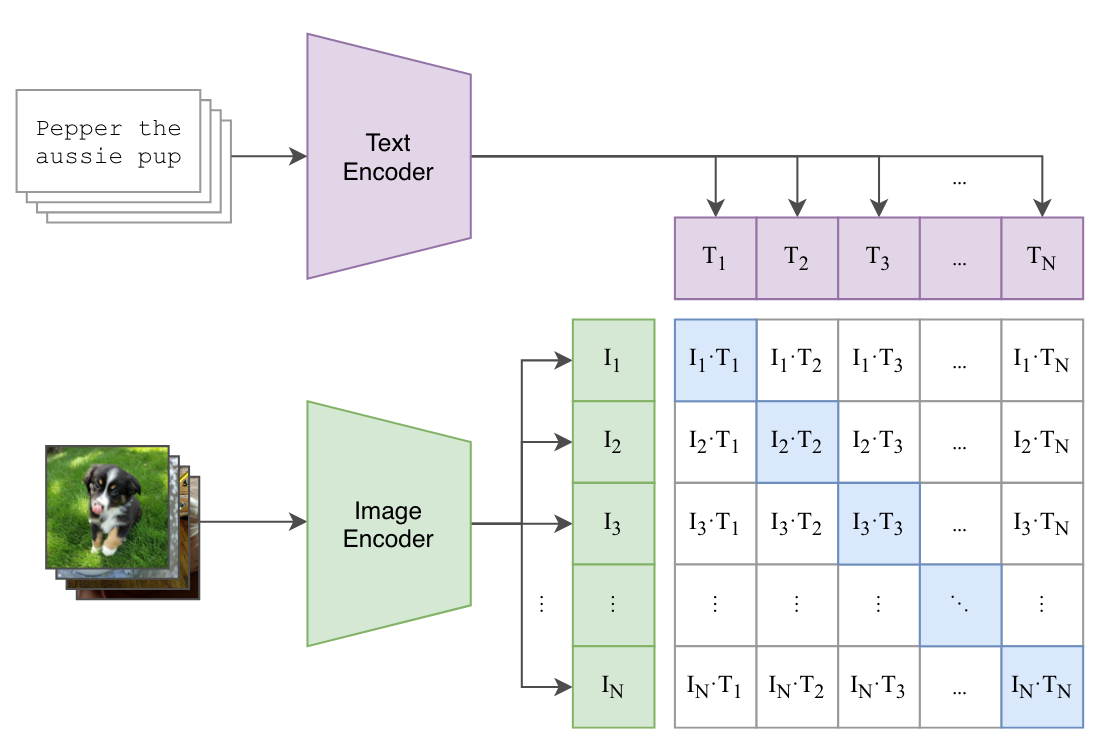

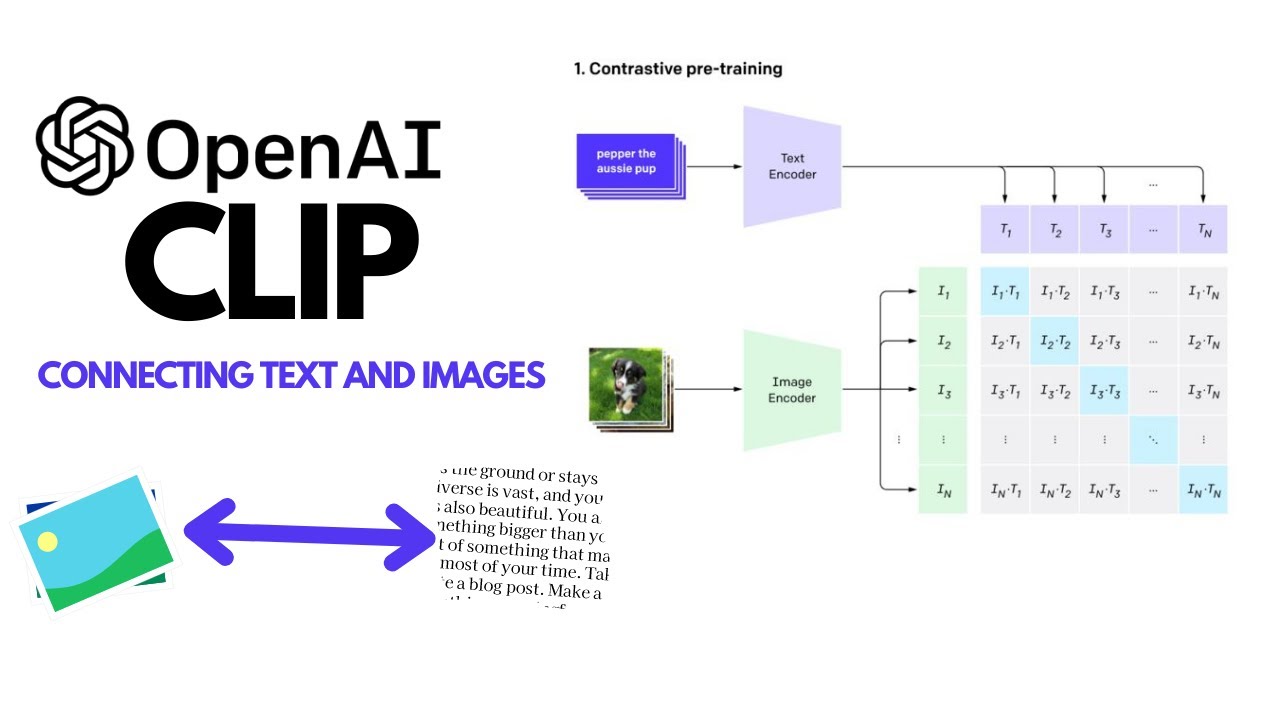

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube