GitHub - jmisilo/clip-gpt-captioning: CLIPxGPT Captioner is Image Captioning Model based on OpenAI's CLIP and GPT-2.

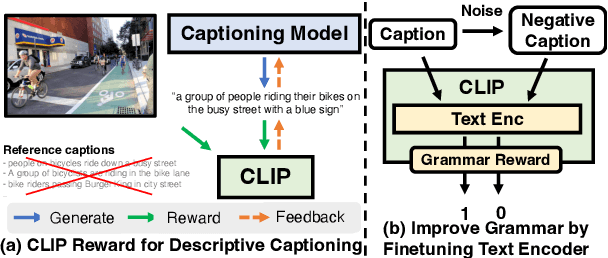

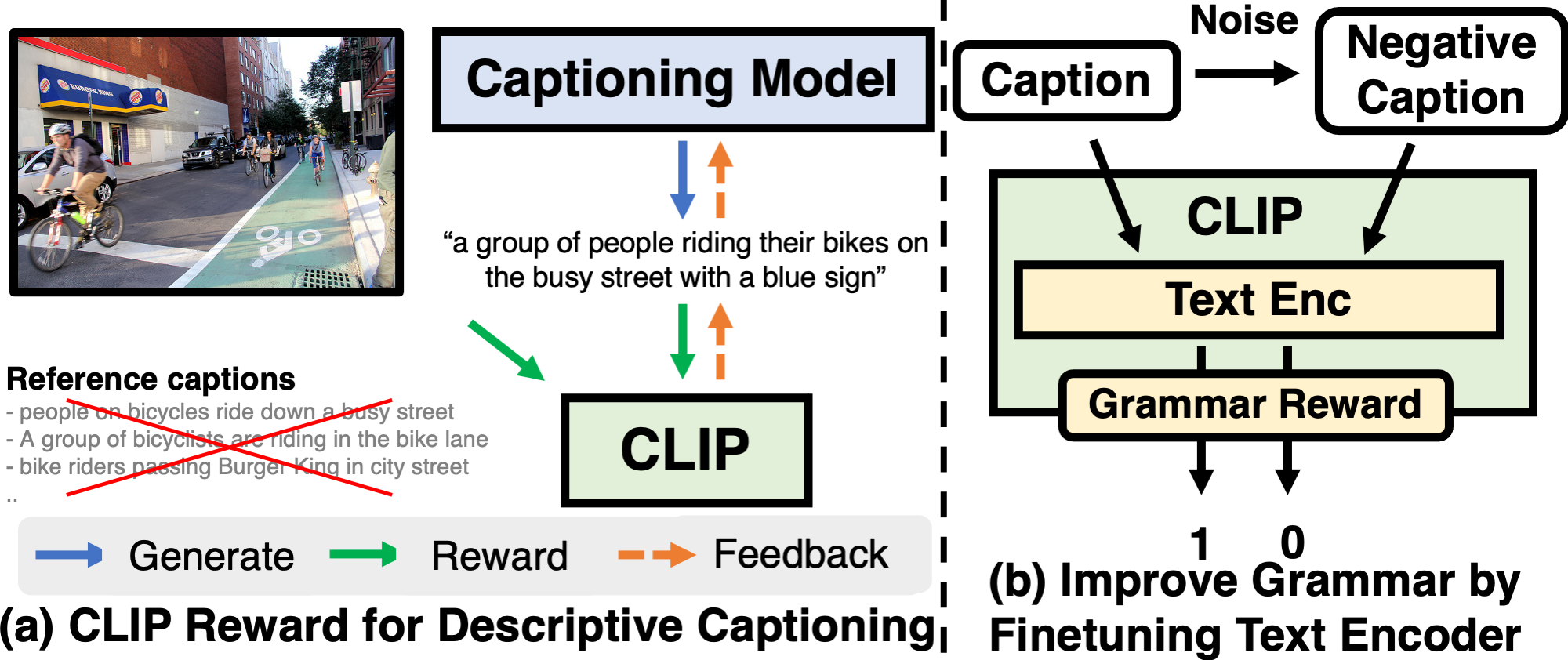

Adobe AI Researchers Open-Source Image Captioning AI CLIP-S: An Image-Captioning AI Model That Produces Fine-Grained Descriptions of Images - MarkTechPost

Image Captioning. In the realm of multimodal learning… | by Pauline Ornela MEGNE CHOUDJA | MLearning.ai | Medium

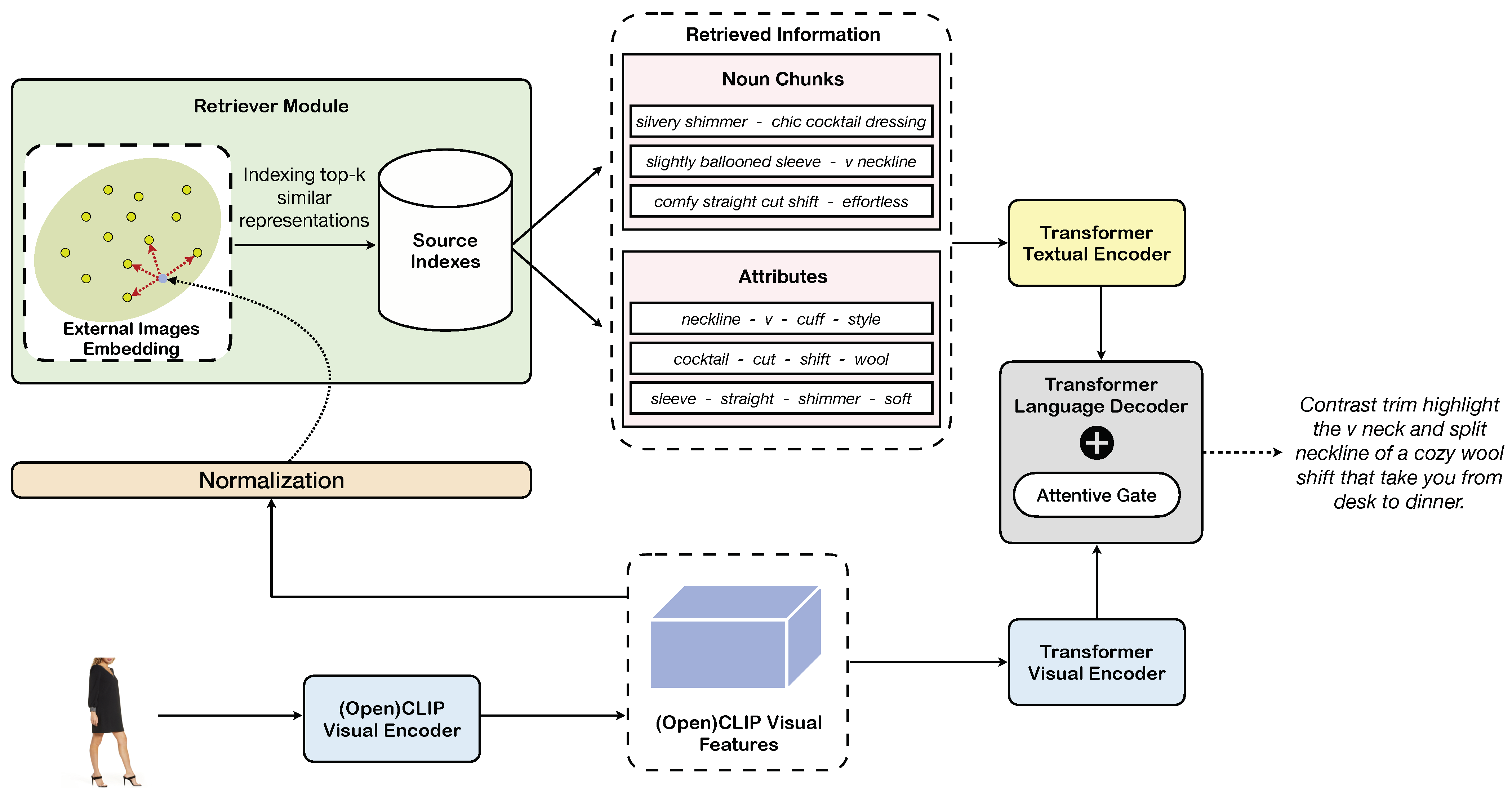

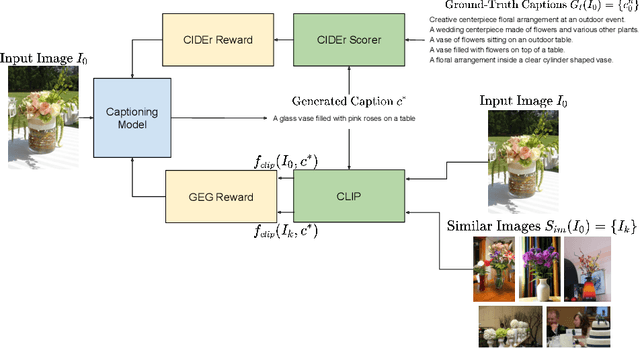

Sensors | Free Full-Text | Fashion-Oriented Image Captioning with External Knowledge Retrieval and Fully Attentive Gates

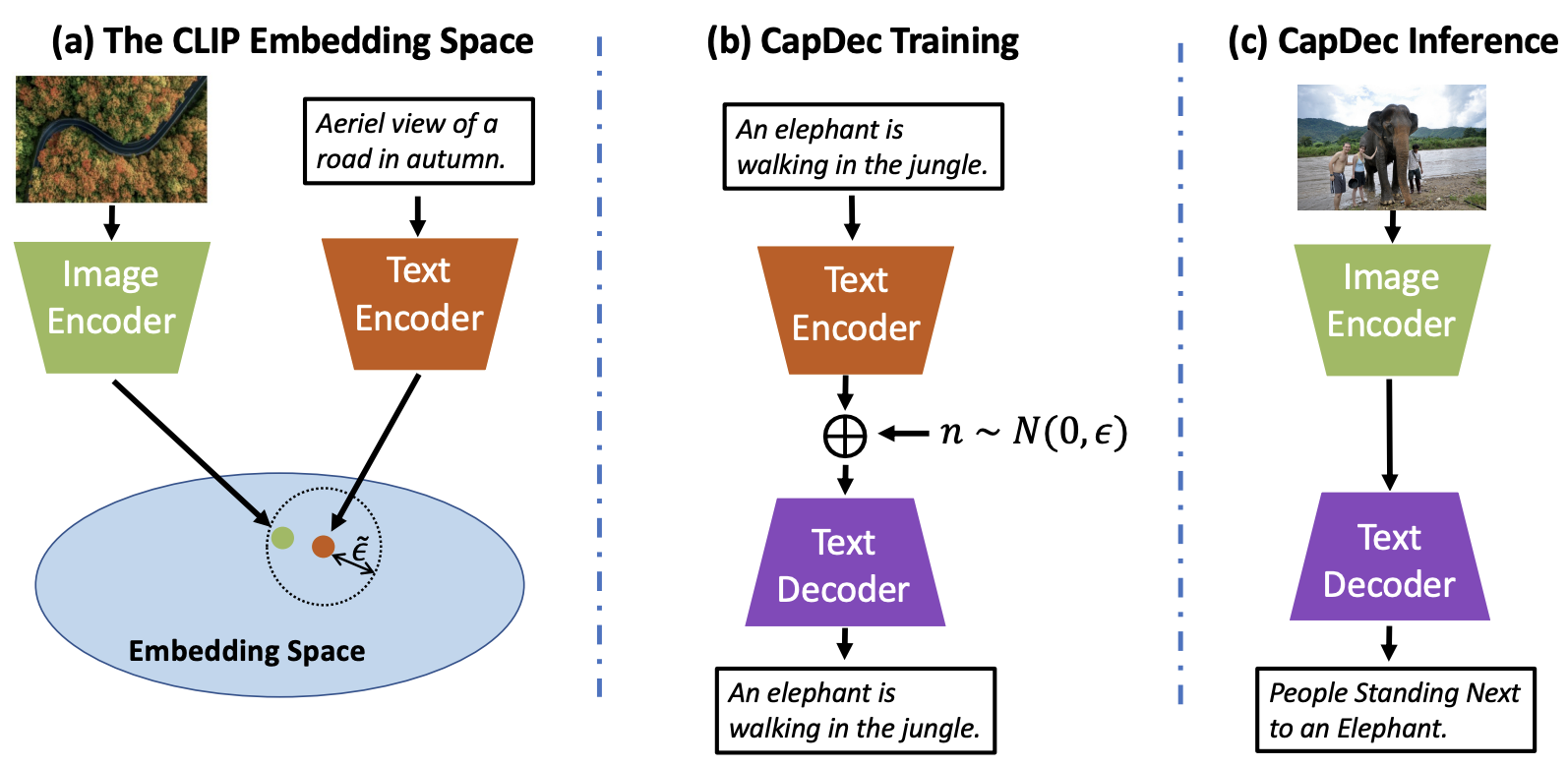

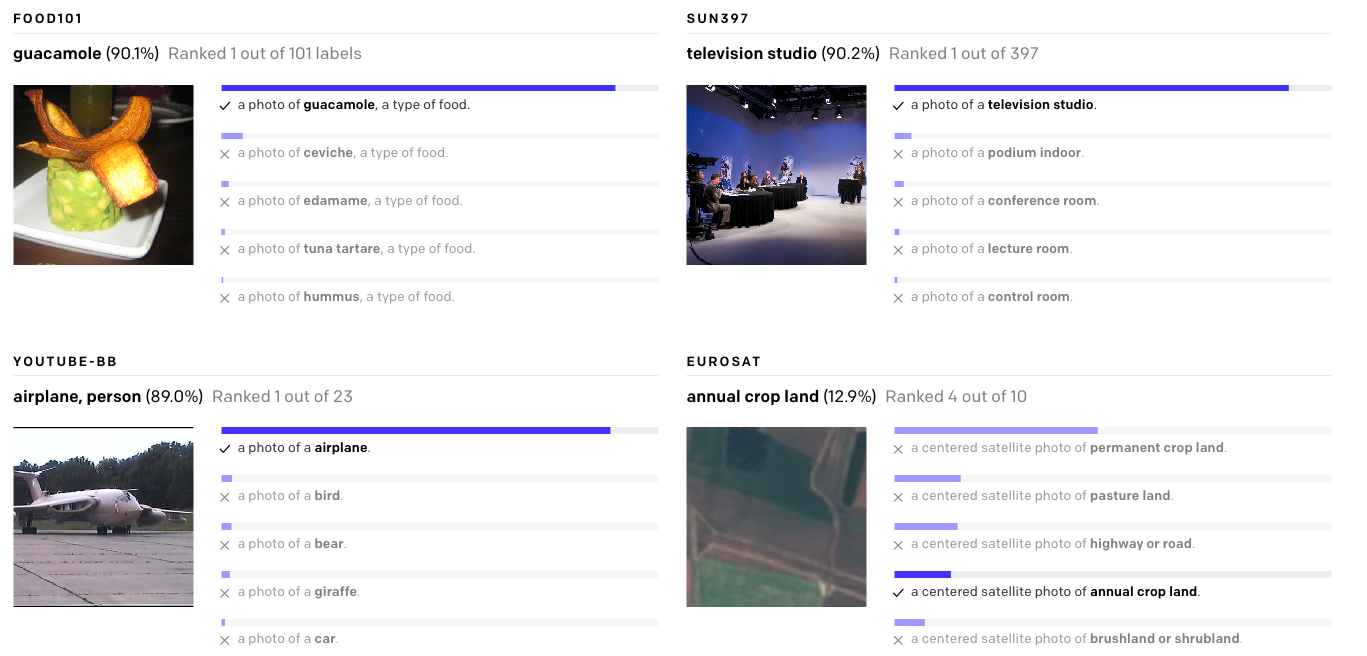

![[PDF] ClipCap: CLIP Prefix for Image Captioning | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/a7aa150b55d64d339b1c154d6d88455fc3cbc44f/6-Figure5-1.png)

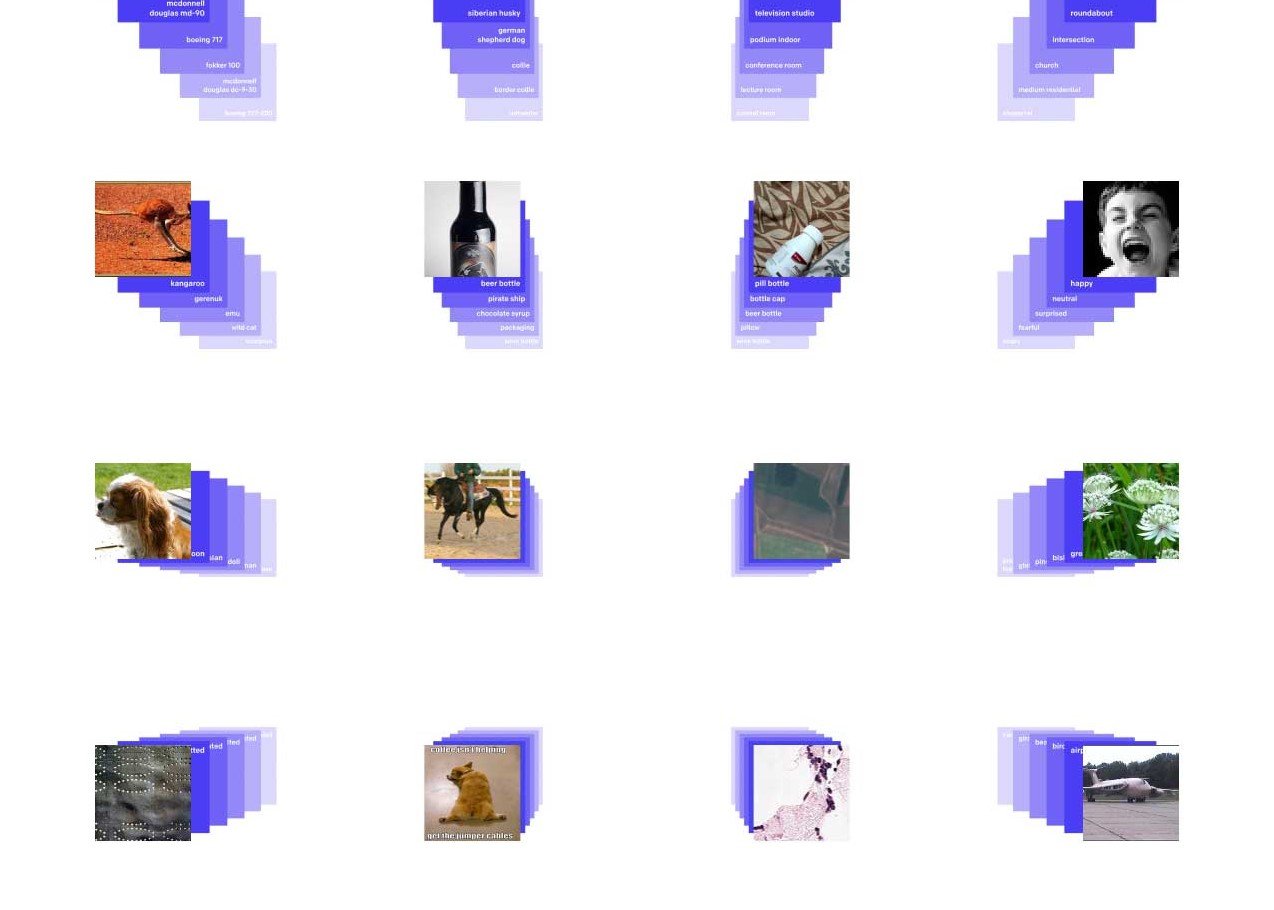

![[PDF] Distinctive Image Captioning via CLIP Guided Group Optimization | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/fea5d9d734426a0049d082fc230c7da14f0bc27a/4-Figure1-1.png)

![[P] Fast and Simple Image Captioning model using CLIP and GPT-2 : r/MachineLearning](https://preview.redd.it/9u29mfdi98s71.png?width=1720&format=png&auto=webp&s=b68b83ab7ea42f507af01d70ede3a70cea82826e)

![[PDF] ClipCap: CLIP Prefix for Image Captioning | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/a7aa150b55d64d339b1c154d6d88455fc3cbc44f/1-Figure1-1.png)

![[P] Fast and Simple Image Captioning model using CLIP and GPT-2 : r/MachineLearning](https://preview.redd.it/0ueotedi98s71.png?width=1714&format=png&auto=webp&s=1cdf8dae57770380337c718fbabf6f58ab45a26d)