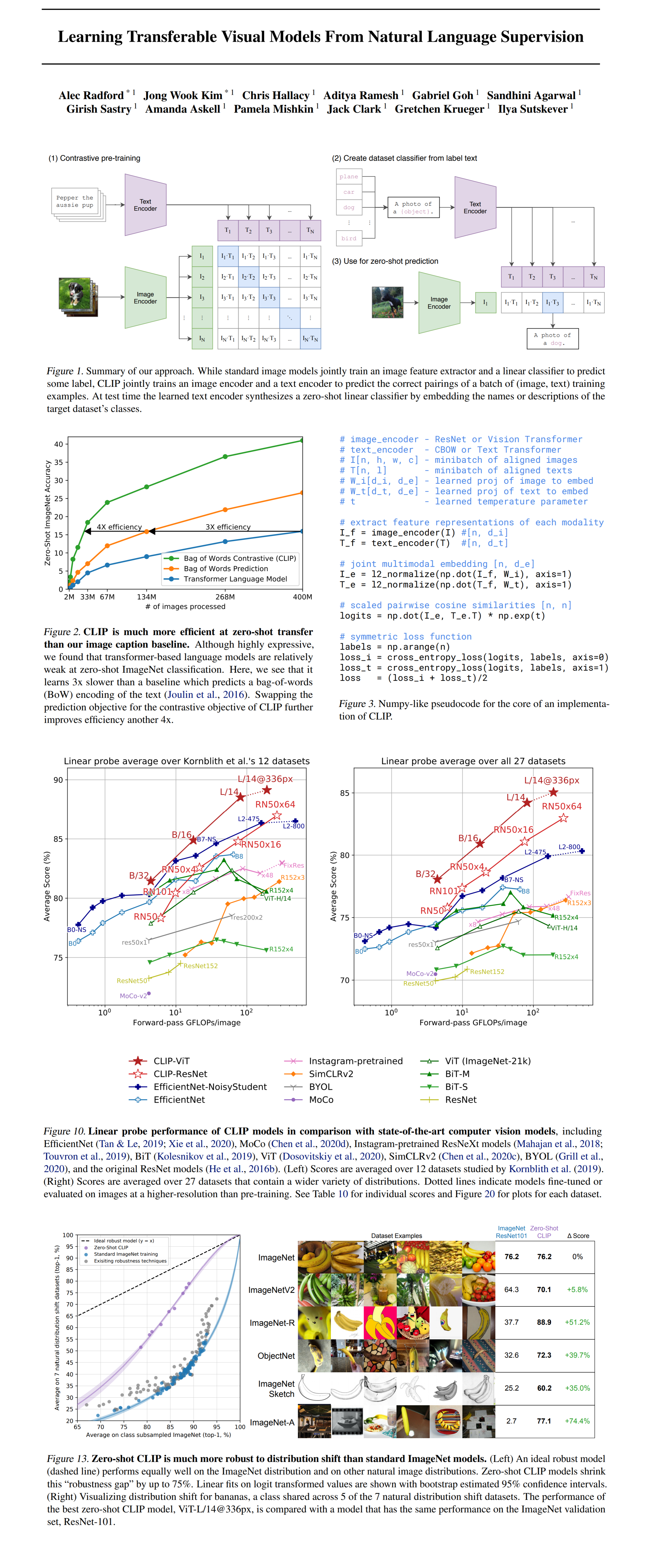

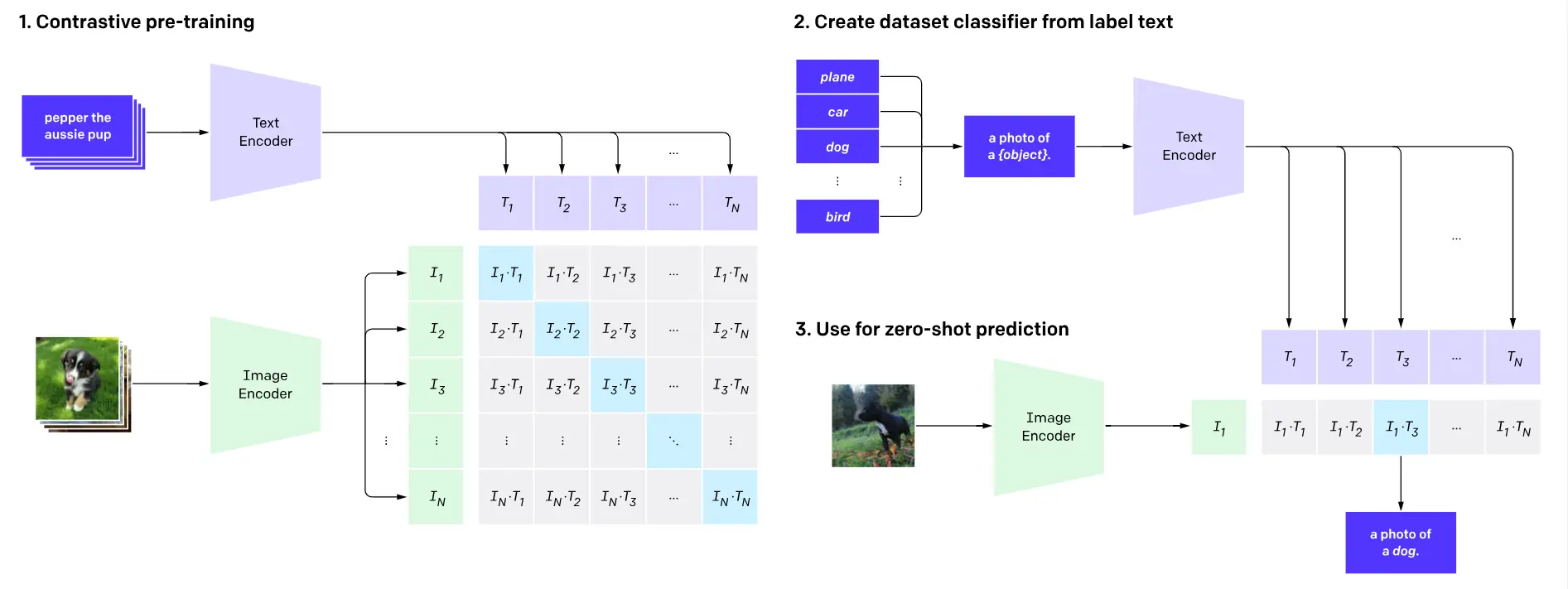

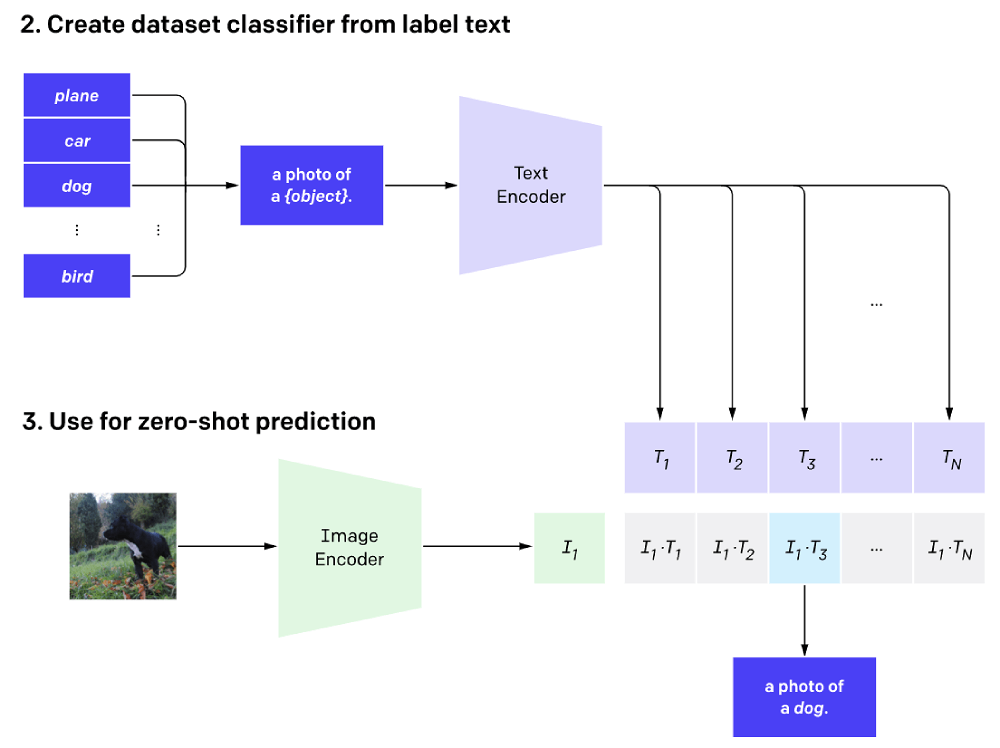

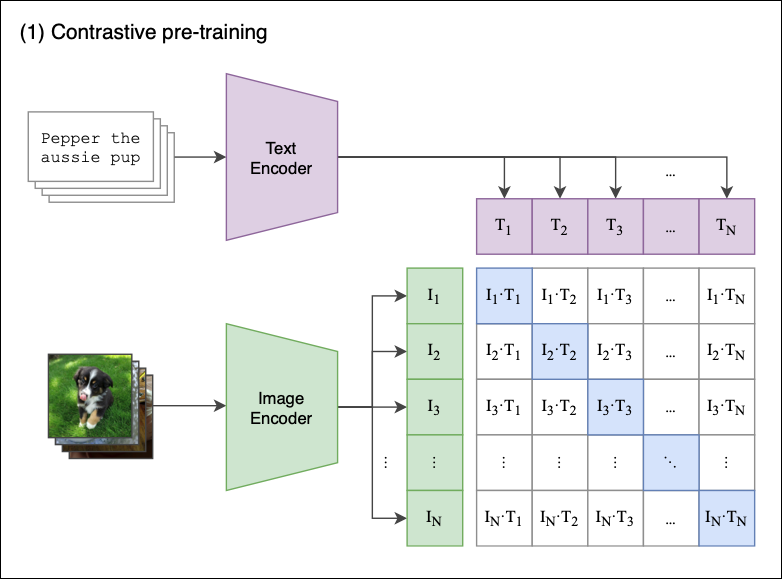

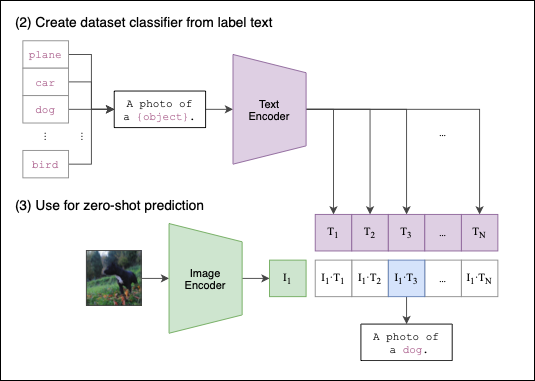

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science
